OCRing Music from YouTube with Common Lisp

There's a tune on YouTube I've always really liked called "Supersquatting." It was written by Dubmood and Zabutom, two masterful chiptune composers, and this track always stood out to me as sounding really full and "fat." For those who don't know, these kinds of chiptunes are usually written in "tracker" software, a category of music composition program that was popular in the 90s and early 2000s. Trackers produce "module" files: self-contained music tracks containing both the note data and the samples, which can be played back quickly in a game, keygen, or just for listening. There's a culture surrounding music trackers that's enmeshed with the demoscene, video games, and software cracking, but I digress. Here's a nice in-depth explanation video about it if you're interested.

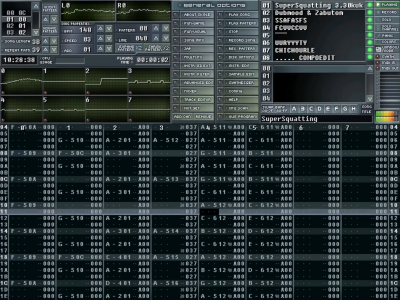

The point is, this track Supersquatting in particular sounds really full, beyond what I normally considered possible with the (these days) rudimentary tools (FastTracker II), so when I first heard it I assumed it must have been made in a more traditional DAW with VSTs and stuff. But then Dubmood himself uploaded a video to YouTube of the track playing in Skale Tracker, and I saw that it's just an 8-channel FastTracker II tune. Damn. Maybe the channels have some EQ on them or something, but still, that sounds awesome for an XM file.

Sadly, I scoured the internet and couldn't find the module uploaded anywhere. Supposedly (judging by the "Razor 1911 intro edit" tagline) it should be embedded in a software crack somewhere, but I couldn't find any information about it, and I didn't want to go installing a bunch of old warez to check.

One nice thing about this format is that the music is, in a sense, "open source." If you have the module, you can crack it open in the editor and steal the samples, mess around with the notes, and generally see how it's done. So I really wanted to get ahold of this tune just to listen and maybe make an arrangement of my own. There's been an idea bouncing around in my head for a while about "scraping" the note data from a tune like this by doing OCR on the YouTube video itself... after all, the notes are right there flowing past, so the module is practically sitting in plain sight. When I saw this holy-grail tune uploaded to YouTube, and given I had some free time around the holidays, I figured it was time to give it a whack and see how well the idea works.

Attempt 1: Tesseract

Of all the classical OCR libraries out there, Tesseract is probably the most famous. There are a few knobs to tweak on it, but in general you just chuck your image at it and let it rip. I honestly figured this would work really well, since these are monospaced, easily readable characters that should theoretically be a perfect match for these old-skool OCR techniques. There's a Lisp binding out there for it, so I quickly grabbed it, pointed it at a sample image, and...

Wtf? I tried all sorts of techniques to pre-process the image, align the text, whatever, and Tesseract sucked every time. I'm not sure if it's optimized for print or what, but I just could not for the life of me get it to produce correct scans more than like 50% of the time. So it seems to be nearly worthless in this day and age.

Attempt 2: ChatGPT

I've historically been really impressed by GPT-4o's ability to transcribe images. This isn't scientific evidence or anything, but it usually gets almost everything I've chucked at it perfectly, even barely legible text, so I thought that on this platonically ideal OCRable text, it should never fail... right?

Urghhh, kinda better than Tesseract, but still, wtf. Again I tried a bunch of methods to enhance the readability of this, but nothing really worked perfectly. It gets it right most of the time but then occasionally just goes nuts and puts something totally wrong. I guess that's what you get when you have "intelligence" interpreting visual data. I also tried Gemini, same situation. Looking back, maybe I should have cranked the temperature down, but regardless, this solution is a bit overkill anyway: it does a separate HTTP request to a massive GPU-based model for every little chunk of text, costs a (relative) fortune, and takes forever.

Attempt 3: Oldskool Pixel Diffing

ax6 on the GBDev Discord recommended that since I already know the exact forms of the characters, and they're on a fixed grid, I can just do a pixel diff against a set of the known shapes and choose the one with the lowest difference. I knew this was possible, but I kind of wanted to keep the solution a little generic. If you want to apply this method to a different tracker, a different resolution, or basically anything different from the exact setup here, you'll need to painstakingly go extract the "known" character set again and find the rectangles of interest, blah blah. But since the other two methods didn't work, I decided to give this a go.
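To make the idea concrete, here's a minimal sketch of nearest-template matching in plain Common Lisp. It is not the code from the repo: it assumes glyphs are already binarized into 2D arrays of 0/1, and the names `pixel-diff`, `best-match`, `*templates*`, and `*noisy-crop*` are made up for illustration.

```lisp
;; Sketch only: glyphs are 2D arrays of 0/1 bits, not ImageMagick wands.
(defun pixel-diff (a b)
  "Count the pixels that differ between two equally sized arrays A and B."
  (assert (equal (array-dimensions a) (array-dimensions b)))
  (loop for i below (array-total-size a)
        count (/= (row-major-aref a i) (row-major-aref b i))))

(defun best-match (crop templates)
  "Return the name from TEMPLATES, an alist of (name . bitmap), whose
bitmap differs from CROP in the fewest pixels, plus that pixel count."
  (let ((best-name nil)
        (best-score nil))
    (loop for (name . bitmap) in templates
          for score = (pixel-diff crop bitmap)
          when (or (null best-score) (< score best-score))
            do (setf best-name name
                     best-score score))
    (values best-name best-score)))

;; Two made-up 3x3 "glyphs" and a noisy crop one pixel away from "C".
(defparameter *templates*
  (list (cons "C" #2A((1 1 1) (1 0 0) (1 1 1)))
        (cons "D" #2A((1 1 0) (1 0 1) (1 1 0)))))

(defparameter *noisy-crop* #2A((1 1 1) (1 0 0) (1 1 0)))

(best-match *noisy-crop* *templates*) ; => "C", 1
```

Because the answer is always the closest known glyph, a bit of compression noise only nudges the score instead of producing a completely different character.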

I booted up Skale Tracker on my system, typed in the notes to get every known character visible, and then manually grabbed the characters in GIMP. I wrote (well, had ChatGPT write) a small script to dump the clipboard to a PNG file, and then I could just hit Ctrl-C in GIMP, double click the script, type the character name, and repeat to quickly extract out the character images... more painless than I had planned actually.

I wrote a little code to load up the directory, and a function to convert a series of "rectangles of interest" into an OCR'd string.

    (defun load-dir (dir)
      "Load every PNG in DIR; return a list of (wand . character-name) pairs."
      (loop for file in (uiop:directory-files dir)
            for nsfile = (namestring file)
            when (search "png" nsfile)
              collect
              (let ((wand (magick:new-magick-wand)))
                (magick:read-image wand nsfile)
                ;; Threshold the template image.
                (thresh wand 0.5)
                (cons wand (no-extension file)))))

    (defun classify (src rect &key (x-offset 0) (y-offset 0))
      "OCR the regions listed in RECT from SRC and return them as one string."
      (apply #'concatenate 'string
             (loop for entry in rect
                   collect
                   (destructuring-bind (img-set x y w h) entry
                     (with-crop src crop ((+ x (* x-offset *col-offset*))
                                          (+ y (* y-offset *row-offset*))
                                          w
                                          h)
                       ;; Pick the template with the smallest difference from the crop.
                       (-> img-set
                           (symbol-value)
                           (alexandria:extremum #'< :key (lambda (candidate) (compare-images (car candidate) crop)))
                           (cdr)))))))
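The offset arithmetic in `classify` is easier to see in isolation. The following sketch only computes the shifted crop regions for a given channel and row; it does no image work. All numbers, the layout in `*note-rects*`, and the image-set names are hypothetical placeholders, since the real values depend on the tracker, font, and video resolution.

```lisp
;; Hypothetical grid pitch in pixels.
(defparameter *col-offset* 120 "Horizontal distance between channels.")
(defparameter *row-offset* 14 "Vertical distance between pattern rows.")

;; Hypothetical layout of one note cell such as "C-5".
;; Each entry is (image-set x y width height), the shape CLASSIFY expects.
(defparameter *note-rects*
  '((*note-letters* 10 0 8 12)
    (*accidentals*  18 0 8 12)
    (*digits*       26 0 8 12)))

(defun crop-regions (rects &key (x-offset 0) (y-offset 0))
  "Return the RECTS entries shifted to channel X-OFFSET and row Y-OFFSET."
  (loop for (img-set x y w h) in rects
        collect (list img-set
                      (+ x (* x-offset *col-offset*))
                      (+ y (* y-offset *row-offset*))
                      w h)))

(crop-regions *note-rects* :x-offset 2 :y-offset 3)
;; => ((*NOTE-LETTERS* 250 42 8 12) (*ACCIDENTALS* 258 42 8 12) (*DIGITS* 266 42 8 12))
```

Describing one cell once and sliding it across the grid means a new tracker layout only needs a new rectangle list and two pitch values.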

Cool Lisp Stuff

I included Lisp in the title so I'll give you what you came for and sing its praises a little ;)

To do image handling, I used lisp-magick-wand, which is a thin wrapper around ImageMagick. One thing I like about Lisp is that you can easily wrap native code (even automatically) and then play around with it in the REPL. There are lots of really useful C/Rust/whatever libraries out there, but the traditional edit/compile/run cycle just makes iterating so clunky and slow. Being able to quickly load up the code and start messing around with it is phenomenal for productivity. To make it even better, I found that SLIME comes with a contrib called `slime-media` which lets you display images in the REPL. I quickly wrote up a wrapper function:

    (defun show (wand)
      (let ((name (format nil "/dev/shm/~a.png" (gensym))))
        (magick:write-image wand name)
        (swank:eval-in-emacs `(slime-media-insert-image (create-image ,name) ,name))
        wand))

and suddenly I had the ability to interactively do image operations and immediately see the result!

I guess you could do this with Python+Jupyter as well, but I dunno, this just really feels nifty to me, like it's a natural extension of the REPL experience. It also helped massively while testing out threshold values for the image to see what would work best for classification.
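For a feel of what the threshold knob does, here's a tiny stand-in written in plain Common Lisp. It is only an illustration, not ImageMagick's implementation or the `thresh` helper from the repo: `binarize` is a made-up name, and it assumes grayscale values in the range 0 to 1.

```lisp
(defun binarize (gray level)
  "Return a bit array with 1 wherever GRAY (values in [0, 1]) exceeds LEVEL."
  (let ((out (make-array (array-dimensions gray) :element-type 'bit)))
    (dotimes (i (array-total-size gray) out)
      (setf (row-major-aref out i)
            (if (> (row-major-aref gray i) level) 1 0)))))

(binarize #2A((0.1 0.9) (0.6 0.4)) 0.5) ; => #2A((0 1) (1 0))
```

Raising the level to 0.7 would drop the 0.6 pixel to black as well, which is the kind of change that is much easier to judge by looking at the image than by reading numbers.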

I wired it all up by having FFmpeg dump out a series of BMP images to a pipe (so I could quickly parse the buffer size and read it into lisp-magick-wand) and set up a parallelized loop to call `classify` and store the parsed-out data.

    (defun drive ()
      (let ((process (uiop:launch-program
                      '("ffmpeg" "-i" "./supersquatting-trimmed.webm"
                        "-f" "image2pipe" "-vcodec" "bmp" "-")
                      :output :stream)))
        (unwind-protect
             (let ((output-stream (uiop:process-info-output process)))
               (run output-stream))
          (uiop:close-streams process))))
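The `run` function isn't shown here, but the "parse the buffer size" part can be sketched on its own. A BMP file begins with the bytes "BM" followed by the total file size as a little-endian 32-bit integer, so back-to-back frames on a pipe can be split without decoding them. The sketch below assumes the stream delivers `(unsigned-byte 8)` elements; `read-u32-le`, `read-bmp-frame`, and `count-frames` are illustrative names, not functions from the repo.

```lisp
(defun read-u32-le (bytes start)
  "Decode a little-endian unsigned 32-bit integer from BYTES at START."
  (loop for i below 4
        sum (ash (aref bytes (+ start i)) (* 8 i))))

(defun read-bmp-frame (stream)
  "Read one complete BMP file from the binary STREAM.
Returns a byte vector, or NIL once the stream is exhausted or truncated."
  (let ((header (make-array 6 :element-type '(unsigned-byte 8))))
    (when (= (read-sequence header stream) 6)
      ;; Magic bytes: ASCII "B" (66) and "M" (77).
      (assert (and (= (aref header 0) 66) (= (aref header 1) 77)))
      ;; Bytes 2-5 hold the size of the whole file, header included.
      (let ((size (read-u32-le header 2)))
        (assert (>= size 6))
        (let ((frame (make-array size :element-type '(unsigned-byte 8))))
          (replace frame header)
          (when (= (read-sequence frame stream :start 6) size)
            frame))))))

(defun count-frames (stream)
  "Consume STREAM and return how many complete BMP frames it held."
  (loop while (read-bmp-frame stream) count t))
```

Each returned byte vector is a whole BMP file, so it could be handed to an image library as an in-memory blob or queued for a worker thread.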

From there it was a simple matter of formatting the rows according to OpenMPT's paste format, and sending it to the clipboard.
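As a rough sketch of that formatting step: as far as I understand the clipboard format, it is a header line naming the module type, followed by one line per row, where each channel cell is a pipe plus note (3 characters), instrument (2), volume (3), and effect (3), with dots for empty fields. Treat the header string and field widths below as assumptions to check against a row copied out of OpenMPT itself. The function names are made up and this is not the repo's `print-all-orders`.

```lisp
(defun format-cell (note instrument volume effect)
  "Format one channel cell from strings; NIL fields become runs of dots."
  (format nil "~a~a~a~a"
          (or note "...")
          (or instrument "..")
          (or volume "...")
          (or effect "...")))

(defun format-row (cells)
  "Join the cells of one pattern row, each prefixed by a pipe."
  (format nil "~{|~a~}"
          (loop for cell in cells
                collect (apply #'format-cell cell))))

(defun print-pattern (rows &optional (stream *standard-output*))
  "Print ROWS, a list of rows of (note instrument volume effect) cells."
  ;; Assumed header for XM data; verify against real OpenMPT clipboard text.
  (format stream "ModPlug Tracker  XM~%")
  (dolist (row rows)
    (format stream "~a~%" (format-row row))))

(format-row '(("C-5" "01" "v40" nil) (nil nil nil "A0F")))
;; => "|C-501v40...|........A0F"
```

Since `print-pattern` writes to `*standard-output*` by default, its output can be captured by anything that rebinds that stream.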

Here's another neat trick for you:

    (defmacro with-output-to-clipboard (&body body)
      "Captures output from body forms and sends it to xclip. Returns the captured string."
      `(let ((result (with-output-to-string (output-stream)
                       (let ((*standard-output* output-stream))
                         ,@body))))
         (with-input-from-string (input result)
           (uiop:run-program '("/usr/bin/xclip" "-in" "-sel" "clipboard")
                             :input input
                             :force-shell nil))
         result))

So then I can just do:

    (with-output-to-clipboard (print-all-orders))

and paste the result directly into OpenMPT. The crazy thing is, it actually worked:

Well, it doesn't sound nearly as good as the original module, but that's because we're missing all the original samples. I just replaced most of them with a square wave. There are also a few "typos" from video artifacts causing improper classification of the letters. Meh, good enough, my curiosity about this particular "nerd snipe" is satisfied for now. Now that I (mostly) have the note data for this track, I'd love to do an arrangement for the OPL3 and include it in a game I'm making... just need to ask Dubmood and Zabutom for permission first :)

Code is all here.

Addendum

It has nothing to do with Lisp, but if you like fun video processing problems and want to work with me, apply to Recall.ai ;)